SpeechPrompt v1

An Exploration of Prompt Tuning on Generative Spoken Language Model for Speech Processing Tasks

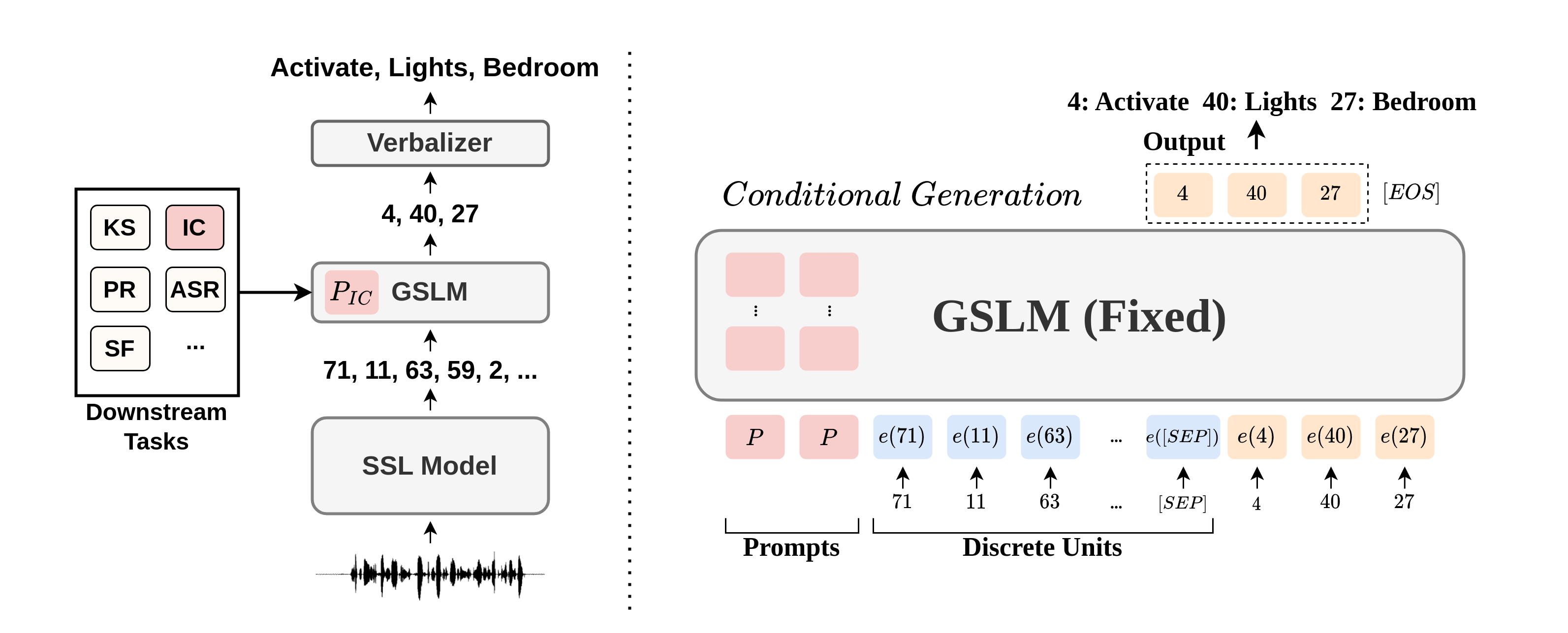

Recently, prompting in Natural Language Processing (NLP) has been found to be an efficient technique for leveraging pre-trained language models (LMs). Specifically, prompt tuning optimizes a limited number of task-specific parameters while keeping the pre-trained model fixed; as a result, only a small set of parameters needs to be stored for each task. Prompt tuning improves computation and memory efficiency by leveraging the pre-trained LM's prediction ability. Nevertheless, this paradigm has been little studied in the speech community.
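To make the idea of prompt tuning concrete, here is a minimal toy sketch in plain Python. It assumes a generic setup and is not the paper's GSLM implementation. A frozen "pre-trained" model receives a few trainable prompt vectors prepended to every input, and gradient descent updates only those vectors. The frozen model here is a hypothetical `EMBED` table over discrete units plus a mean-pooling `HEAD`. All sizes, the toy data, and the names `predict`, `loss`, and `train_prompt` are hypothetical illustrations.

```python
import math
import random

# Toy prompt tuning sketch (hypothetical model and sizes, NOT GSLM):
# a frozen "pre-trained" model plus trainable prompt vectors prepended
# to every input sequence. Only the prompt vectors are ever updated.

DIM = 4          # hypothetical embedding size
VOCAB = 6        # hypothetical number of discrete units
PROMPT_LEN = 3   # hypothetical number of prompt vectors

rng = random.Random(0)

# Frozen parameters: unit embeddings and an output head. Never updated.
EMBED = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(VOCAB)]
HEAD = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def predict(prompt, tokens):
    """Probability of class 1 for a unit sequence with the prompt prepended."""
    seq = prompt + [EMBED[t] for t in tokens]
    pooled = [sum(vec[d] for vec in seq) / len(seq) for d in range(DIM)]
    logit = sum(p * h for p, h in zip(pooled, HEAD))
    return 1.0 / (1.0 + math.exp(-logit))


def loss(prompt, data):
    """Mean binary cross-entropy over (tokens, label) pairs."""
    total = 0.0
    for tokens, label in data:
        p = predict(prompt, tokens)
        total -= label * math.log(p) + (1 - label) * math.log(1.0 - p)
    return total / len(data)


def train_prompt(data, steps=200, lr=0.5):
    """Gradient descent on the prompt vectors only; EMBED and HEAD stay fixed."""
    prompt = [[0.0] * DIM for _ in range(PROMPT_LEN)]
    for _ in range(steps):
        grads = [[0.0] * DIM for _ in range(PROMPT_LEN)]
        for tokens, label in data:
            n = PROMPT_LEN + len(tokens)
            err = predict(prompt, tokens) - label  # dLoss/dlogit for BCE
            for i in range(PROMPT_LEN):
                for d in range(DIM):
                    # Each prompt vector enters the mean pool with weight 1/n.
                    grads[i][d] += err * HEAD[d] / n / len(data)
        for i in range(PROMPT_LEN):
            for d in range(DIM):
                prompt[i][d] -= lr * grads[i][d]
    return prompt


# Usage on a hypothetical toy dataset of (unit sequence, label) pairs.
data = [([0, 1, 2], 1), ([3, 4], 1), ([5, 0], 0), ([2, 3, 1], 1)]
embed_snapshot = [row[:] for row in EMBED]
head_snapshot = HEAD[:]
before = loss([[0.0] * DIM for _ in range(PROMPT_LEN)], data)
prompt = train_prompt(data)
after = loss(prompt, data)
print(f"loss before: {before:.4f}, after: {after:.4f}")
print(f"numbers stored for this task: {PROMPT_LEN * DIM}")
```

In this sketch, only `PROMPT_LEN * DIM` numbers (12 here) would be stored per task, while the frozen weights are shared across tasks. Because the toy model mean-pools its inputs, every prompt vector receives the same gradient, so the example shows only the mechanics. In a Transformer LM, prompts instead interact with the input through attention.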

In this paper, we report the first exploration of the prompt tuning paradigm for speech processing tasks based on the Generative Spoken Language Model (GSLM). Experimental results show that prompt tuning achieves competitive performance on speech classification tasks with fewer trainable parameters than fine-tuning specialized downstream models. We further study the technique on challenging sequence generation tasks, where prompt tuning also shows potential; we discuss its limitations and possible research directions.

Citation

@inproceedings{DBLP:conf/interspeech/ChangT0L22,
  author    = {Kai{-}Wei Chang and
               Wei{-}Cheng Tseng and
               Shang{-}Wen Li and
               Hung{-}yi Lee},
  title     = {An Exploration of Prompt Tuning on Generative Spoken Language Model
               for Speech Processing Tasks},
  booktitle = {{INTERSPEECH}},
  pages     = {5005--5009},
  publisher = {{ISCA}},
  year      = {2022}
}